Token economics 101

Tokens are the currency of AI

TLDR

Making tokens cheaper won’t scale if smarter models just tempt us into longer, “think harder” sessions. As always, the 80/20 rule applies, with electricity prices a serious chokepoint.

The honest math of reasoning scalability is price × tokens × sessions (nudged by smarter reasoning processing on the back-end). That curve only bends so far.

The application layer has already suffered from this. The winner takes all, VC backed growth at all cost strategy is wearing thin. As we approach bubble territory, if investors shift from revenue to profitability, and if no true feasible pricing strategy emerges, we may never get AI.

Until there’s a true 10× efficiency jump or most queries run on local machines, expect unstable pricing models.

Ten times cheaper, fifty times more tokens

I’m sick of people using Moore’s law to argue for models becoming ten times cheaper next year.

Maybe they will.

It still doesn’t pencil out.

Cloud-only, deep-reasoning-by-default consumer assistants struggle to clear margin at scale. Token economics can’t support the way we actually want to use it.

In my rant, I’m going deep. Come along if you dare. Forewarning - there’s a little math in this one.

I promise it’s worth holding on.

Let’s start with token cost

I had a conversation the other day with a normie.

What I mean by that, is someone who doesn’t spend an unhealthy amount of time with AI. I had to explain what a token was. Might be useful here too, if you identify as one (love that you’re here, by the way).

Basically, a token is a tiny slice of text.

Every time you fire off a prompt like “What’s wrong with me” or “Is it normal if I…” (I see you, trauma dumper), two meters spin:

What the model reads (input), and

What it writes (output).

Providers like OpenAI or Anthropic usually bill both. Output is often pricier than input because long, careful writing requires more computation and inference. If you’re on a flat price plan, you won’t see the meter, but that doesn’t mean it doesn't exist. Someone has to cover the bill.

Oh, important nuance here I want to make sure you understand… tokens aren’t the cost.

The cost is the compute required to process tokens (math on GPUs, memory moves, retrieval hops, safety passes, logging, storage, all the super techie stuff). Tokens are an effective measurement that helps derive a common baseline for measurement.

The shape of a useful session

The real problem isn’t price-per-million tokens.

Non-reasoning models answer in a straight line: you ask, they reply. Predictable, but shallow.

Question. Answer. No need to ‘think’ about how to approach an answer.

When AI first came on the scene, pricing AI as a token made sense, and arguably was relatively predictable (within a margin).

Then came reasoning.

Reasoning models think in steps. They take time to understand the question.

They try to understand what you’re wanting as an outcome and think through various steps to get to the answer. Ultimately, they plan, reflect, call a function, re-check, then write. That “care” is what makes hard problems solvable.

It also generates lots of tokens you never read and encourages longer outputs (examples, code, citations, “why”). You’ve probably seen the “now I am doing this, now I am doing that, I need to now do this, hmm that didn’t work” logic chain in your chats. Since output tokens are typically priced higher, useful often means expensive.

Providers do try to fight the curve.

They try to reuse shared prompt scaffolds, re-embed repeated chunks, and batch workloads so the incremental cost of a long answer is less than “start from zero.” But even with those tricks, longer, careful outputs are where the money goes.

Context is a price floor

In this, it's the context that raises the price floor.

We’re all guilty of pasting in a contract, a repo, three PDFs and a brief. Large context windows kinda feel like magic. The model must read what you paste. The more context, the larger the risk of hallucination or failure. Each retry drags the whole history forward, making the next try pricier.

Cha-ching, cha-ching, cha-ching.

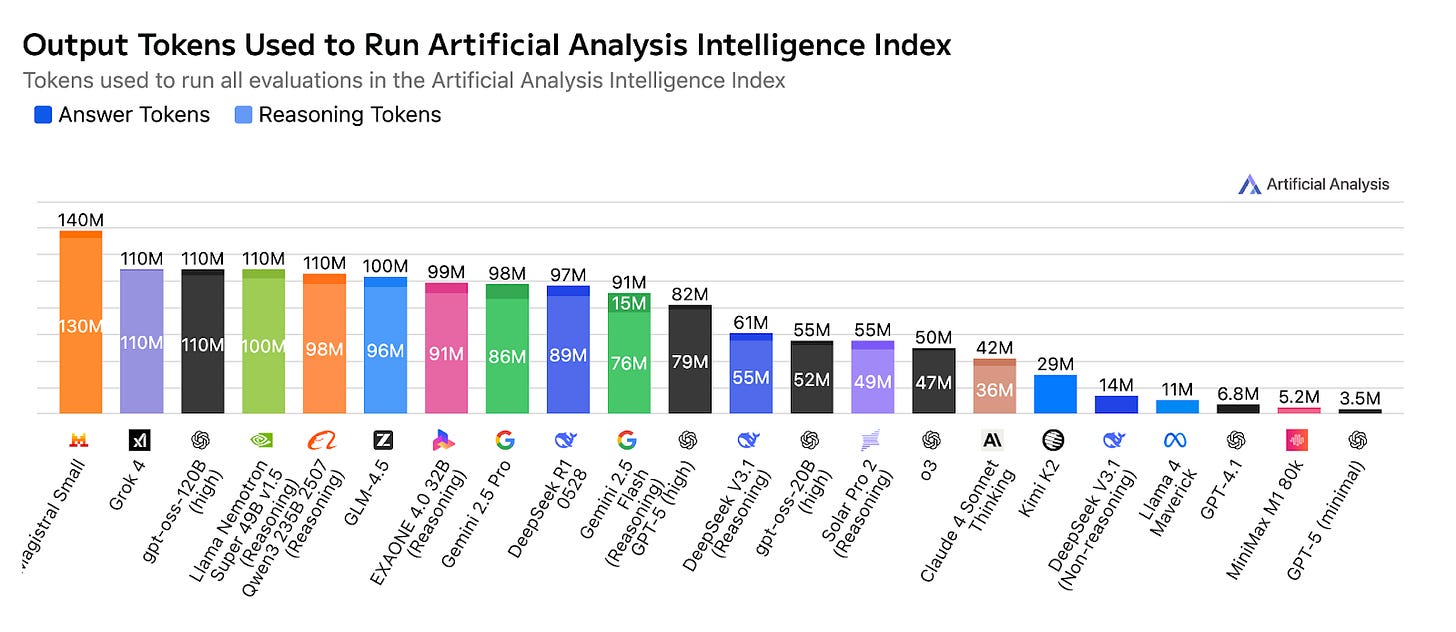

As models get better, they do more, which means more tokens, not fewer. Ten times cheaper, fifty times more tokens used.

The math cancels.

As you can see, the frontier models still cluster in the expensive corner.

The OG glazer, the golden child, the ol’ faithful GPT-4o sits around the high single digits per million tokens on a blended basis.

But Blake, your whole argument is invalid as cheaper models exist.

Yes. and no.

Realistically, people still reach for the top model when the work matters.

Let’s make it personal… I can’t be the only one that gets a little bit annoyed if ChatGPT answers me too quickly, without thinking. It’s like it didn’t really try?

No one wants GPT-5. They want GPT-5 Thinking.

The logic of application pricing

Three levers decide the bill:

U: unit price per token (what you pay)

T: tokens per session (how much “thinking + writing” each answer needs)

S: sessions per user (how often people come back)

Therefore, the cost per user = U × T × S.

Now add the provider’s hidden lever:

E: efficiency (how much work they squeeze out per visible token via caching, batching, faster kernels)

Raising E lowers cost even when your visible tokens don’t change.

But if T x S explodes (deeper chains, more habit), cheaper U and better E often get swamped.

Here’s the dirty secret providers don’t want you to know.

Price (U) can fall.

Use (T x S) usually rises as quality improves.

When T x S pulls harder than U and E combined, the total bill still goes up.

So far, this has always been the case.

The math ain’t ‘mathing’.

The price wars in the application layer

We’re seeing the false economics in pricing AI native applications.

Different wrappers, same problem.

Cursor, Claude Code, Codex, Lovable.

When the product starts to think, the only lever you can show publicly is price, so you pull it. Meanwhile, the two invisible levers, tokens (t) and sessions (s) spike.

Let’s run the four standard plays for competing business’ and why they still lose in broad consumer land.

Usage-based vs usage-based

Consumers keep a watchful eye on the usage meter. People end up rationing their prompts. As experimentation declines due to cost concerns, growth stalls. The applications try to cut prices to look approachable, but the big tasks stay expensive. Margins get thinner and thinner and thinner. We saw this play out in our short math lesson above.

Flat pricing vs flat pricing

This is the common pattern that we’ve seen so far. Until it wasn't feasible. For consumers, it was party time, until heavy users dominated costs. The 1% of ‘super users’ chewed through tokens at an impressive rate, ruining the party for the rest. Margins diminished, caps were added and prices were hiked. Trust drops. New competitors come in to try to capture the opportunity.

The cycle continues.

Metered usage (capped) vs a flat subscription

Simply put, without a 10x improvement in experience compared to competitors, metered usage will lose signups. The value for the consumer in terms of usage is too great, unless joining the great subsidy war. For a time.

Flat subscription vs Metered usage (capped)

Literally the other side of the above, but with a different time scale.

Flat subscription will win adoption early and get crushed by heavy users. The flat subscription will have to add caps. Same ending. Lower prices on the page, higher costs behind the page. Token economics will always win in the end.

Some interesting pricing strategies have emerged, time will tell.

I’ve seen some applications charge for outcomes, not tokens. Examples like charge per bug fixed, claim adjudicated, lead qualified, call deflected. If the task saves $100, paying $10 works.

Tier the “thinking.” I’ve seen models give everyone ‘good’ answers. The value remains. But the applications reserve “think really hard” and giant contexts for higher tiers with honest limits. Users still get value but costs don’t spiral.

Use the device for the easy/medium. Let phones/PCs do short, private, latency-sensitive jobs. When long, tool-heavy, cross-document jobs are required, sent to the cloud. The average cost drops even though the biggest jobs still cost real money.

These might hold if one or two of following cases become true:

Efficiency (E) improves by >10× while quality holds steady.

>70% of consumer queries run on-device at acceptable quality.

Outcome-priced agents hit >50% automation with <5% rework.

Until then, we’re selling magic while trying not to show the meter.

The magic is real but the meter is too.

Until next time.

Blake