AI, can you be my friend?

Remember when we thought AI would replace our jobs? Plot twist: it's replacing our therapists instead.

I’ll start with the scariest statement I have heard from a tech CEO in a long time.

“AI friends might be the solution to Americans having fewer than three close friends on average” - Mark Zuckerberg

We're Redefining Human Connection

I’ll be honest… I didn’t see this coming.

For better or worse, we're making relationships with non-human entities the norm. Are we prepared for the psychological and social implications?

Probably not.

Here’s what the data from Harvard Business Review has shown us so far:

In twelve months the use of AI flipped from tool to therapist.

Coding help slid down the charts while “talk me through my panic spiral” shot to #1.

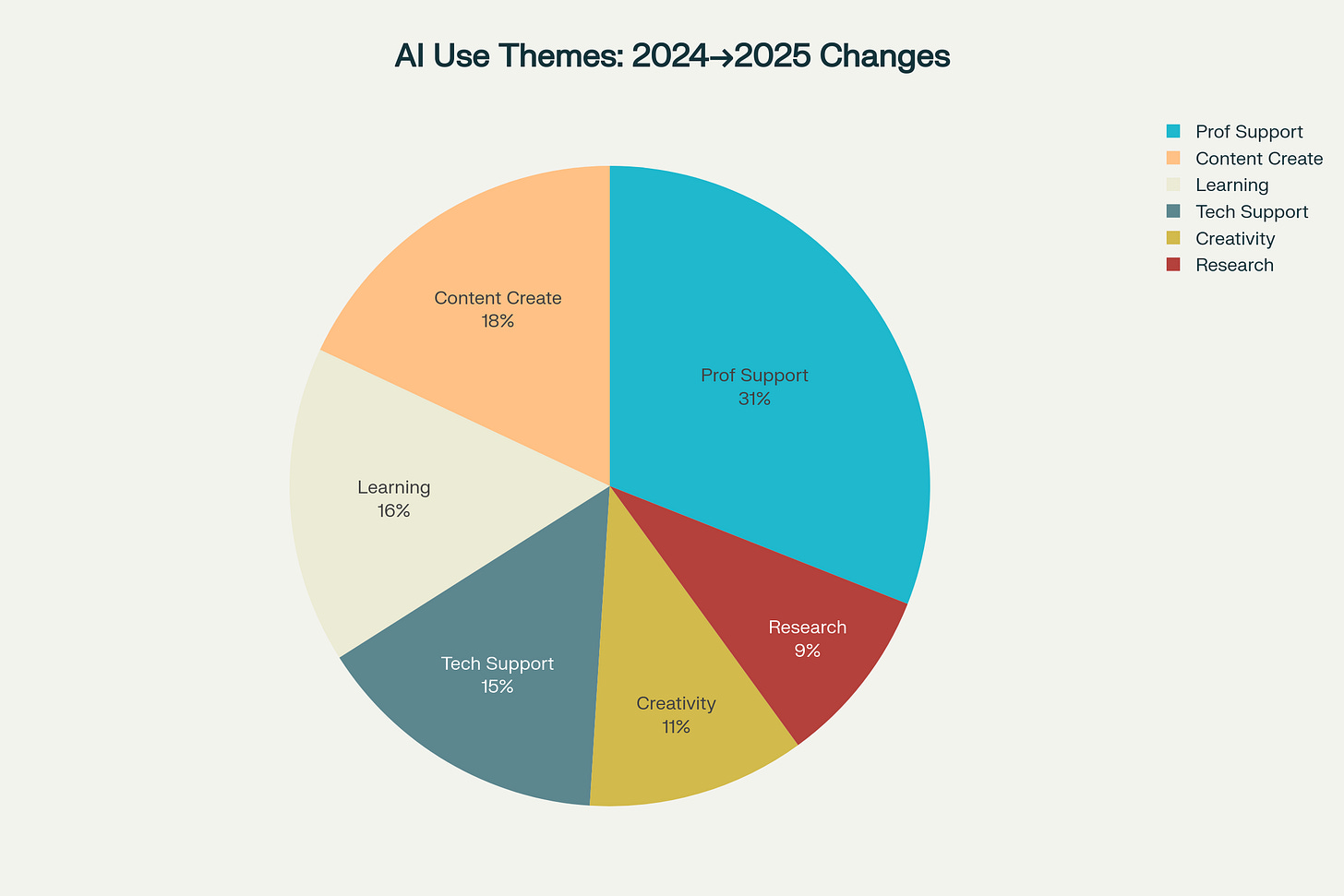

Personal & Professional Support jumped from 17 percent of interactions in 2024 to 31 percent in 2025.

We stopped asking “How do I fix this?”

And started asking “Can you sit with me while I unravel a little bit?”

In fact, I made a chart from the HBR data to show some of the changes in how we use AI from 2024 → 2025.

From Search Engine to Soul Engine

The technical use cases aren't just declining, they're being actively replaced by emotional ones.

What died in 2024:

Specific search (fell off the top 10)

General advice (dropped out of the top 10)

Troubleshooting (down significantly)

What exploded in 2025:

Therapy/companionship (#1)

Structuring my life (#2)

Finding purpose (#3)

Enhanced learning (#4)

The change is serious:

Specific search dropped out of the top 10 entirely (RIP to all those "best pizza near me" queries)

General advice also got the boot from the top 10

Personal & Professional Support exploded from 17% to 31% of all AI interactions

The U.S. Surgeon General called loneliness a public health epidemic.

Riskier than obesity. As deadly as smoking up to 15 cigarettes a day. That was 2023.

By 2025, people stopped looking for friends. They started opening ChatGPT instead. That’s not me rambling. That’s just the data. The numbers tell a story of a society desperately seeking connection:

100 million people worldwide now use AI companions regularly

50% of adults reported experiencing loneliness before COVID (it's only gotten worse)

61% of young adults experience serious loneliness, according to Harvard studies

What does that mean for our future?

The three Blakes sitting on my shoulders right now tell me this:

The optimistic take: AI is an incredible supplementary source of emotional support, available 24/7 to anyone with a smartphone. It could be a bridge that helps people build the confidence for human relationships.

The pessimistic take: We're creating a generation that's more comfortable confiding in algorithms than humans, potentially deepening the very isolation we're trying to solve.

The realistic take: Like most technological revolutions, this one will probably be both terrible and wonderful in ways we can't yet imagine. (Not an incredibly helpful take, I know!)

What is undeniably true

AI friends don’t challenge you.

They don’t push back.

They don’t have rough days.

They never misinterpret.

And they never, ever leave (phew...)

Which means they’re not relationships.

They’re reflections.

If I only ever talk to a reflection, I don’t grow. I just reinforce.

And that scares me.

So, the next time someone asks you what people actually use AI for, don't say "productivity" or "coding." Say "emotional support" and "finding meaning." Apparently, in 2025, we're all just trying to figure out this whole "being human" thing, and we've decided our best shot is asking a computer for help.